Arlington Public Schools Career Expo: February 7, 2026!

from the Marymount University blog:

from ARLnow:

County school leaders have announced growth plans for the Arlington Tech program, which will include a doubling of the student body by the 2029-30 school year.

At the same time, Arlington Public Schools confirmed Friday, Jan. 16 at 4 p.m. as the application deadline for county students vying for spots in the Arlington Tech program and in other option schools and programs.

County school leaders will use the opening of the new Grace Hopper Center in August to provide the additional space needed to expand the Arlington Tech program from 500 to 1,000 students over four years.

According to school leaders:

“This expansion ensures that more students can access Arlington Tech, which blends rigorous academic instruction with innovative Career and Technical Education (CTE) pathways in STEM fields and emerging technologies, through project-based learning, dual-enrollment pathways towards an associate’s degree and year-long senior ‘capstone’ through internships with industry partners.”

APS leaders said the expansion comes at a critical time for preparing students for

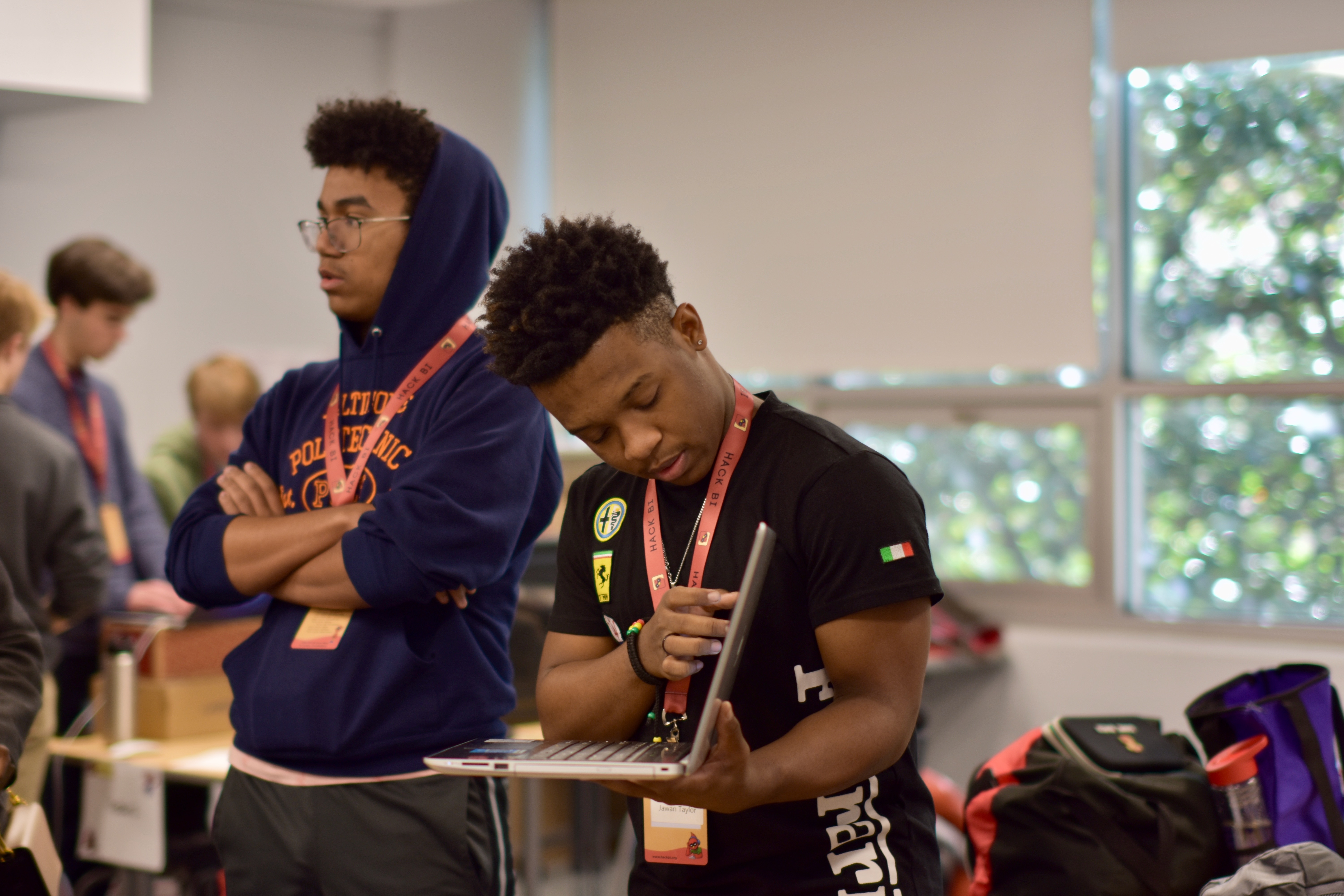

HackBI is an annual hackathon for middle and high school students that has been run by the students of Bishop Ireton High School for 9 consecutive years. At HackBI, you will learn new things and collaborate with others to make your ideas come to life. Check out our FAQ if you have any questions.

Read details at www.childsci.org/get-involved/internship

The Youth Development Initiative strives to develop and implement successful internship opportunities that provide students with the skills and experiences needed to obtain and maintain employment. These internships utilize a combination of formal instruction, mentoring, hands-on STEM experience, project-based learning, and continuous feedback. It is the Science Center’s vision to continue growing this program into a gateway experience to internships and potential employment with local companies with a focus on underrepresented populations.

The Science Center’s Youth Development Initiative includes an extensive variety of internships and leadership programs for high school and college-aged youth. Since 2015, the Science Center has engaged over 300 interns through youth development programs in the community and at the Lab. For important questions, email internships@childsci.org.

The Science Center operates internship and leadership programs throughout the year:

A letter to teens about AI and jobs

Some practical advice about what you should do to be on the right side of disruption

Episode six in our YouTube series, “Raising Kids in the Age of AI,” focused on “Preparing kids for careers in an AI world.” It’s by far the most popular. That episode was tailored to parents, but I decided that I want to write something directly for the kids themselves on the topic. After all, Gen Z is probably more clued in to the growing chorus about the impact of AI on jobs than anyone else.

A note to parents and educators: This letter is written directly to teens (specifically a rising senior in high school), but it applies to anyone wondering how to make decisions about their future. If you have a young person in your life navigating these questions, consider sharing this with them. Or read it yourself; the framing might help you give better advice. Either way, I hope it’s useful.

Here’s the TL;DR: AI is real and will massively disrupt the job market right as you’re starting your career. But every industrial revolution has created more jobs than it destroyed. The key is being on the right side of the transition. The old playbook is dead: “pick the ‘safe’ major, follow the predictable path.” Instead,